What are your two favorite things to wear?

Hoodies….they are like comfort food for my skin.

Fashion forward clothes that make me look great…so I feel confident.

Research and commentary at the intersection of psychology, technology, and ethics. Exploring what it means to stay human in an age of intelligent machines.

Click link for the FULL Paper (GitHub)

Or view substack for more info https://stabilitycapitalism.substack.com

What You Get

How It Works (In Plain English)

Who Pays?

Only big tech + big corporations:

Why This Matters

What It’s NOT

Why It’s Guaranteed to Work

$3,000/month for every working-age American.

No new taxes. No debt. No BS. Just a tiny slice of AI profits funding human stability.

NOTE: This is a policy paper describing a proposal; it is not current law and has not been passed by any legislature. If you see value in it, please reach out to your representative.

-Updated 11/23/25

We are living through the fastest labor disruption in modern history.

AI and robotics are eliminating or transforming jobs three times faster than economists predicted just a few years ago. In the first ten months of 2025 alone, American companies announced 1.1 million job cuts, 65% more than in the same period last year. Verizon, Amazon, Bosch, Accenture, Microsoft, and Ford have announced massive restructurings tied directly to automation and AI adoption. The people getting hit hardest are 47-50 years old, the age at which most families are earning and saving the most for retirement.

The problem isn’t technology. The problem is that our current economic systems were never designed for this pace of change.

UBI, robot taxes, retraining grants—these are solutions built for a slower world. They redistribute the pain, not the progress. What we need now is a way for people to share directly in the productivity of the machines that are replacing them.

This essay introduces the concept of Stability Capitalism and the AI Dividend—a neutral, innovation-friendly model inspired by Alaska’s 40-year-old Permanent Fund (run by Republicans) and Finland’s world-leading State Pension Fund (VER). Instead of punishing companies for adopting automation, it ensures every U.S. citizen aged 18-66 in households earning $75,000 or less receives $3,000 per month, direct deposited. Current Social Security recipients keep 100% of their benefits—guaranteed for life.

Because the truth is simple:

If AI is powerful enough to replace us, it’s powerful enough to pay us.

The Cliff Has Arrived

We are already standing at the edge. In the first ten months of 2025, U.S. companies announced 1.1 million job cuts—a 65% surge from 2024. Amazon’s 14,000 corporate layoffs, Bosch’s 13,000 cuts, Accenture’s 11,000, and Microsoft’s 9,100 show that this is not a theoretical debate—it’s a structural shift already underway.

The unemployment rate has barely moved (up just 0.1 percentage point), but that number hides the truth: one in four of the jobless (1.8 million people) has been out of work for more than six months. When benefits run out, many simply stop being counted.

Rehiring is brutal. Only 40-50% of laid-off workers land at the same or higher wage; the rest take a 10-20% pay cut. And the people getting hit hardest are not twenty-somethings. They are 47-50 years old, at the stage of life when most families are earning and saving the most for retirement.

Workers aged 45 and older now make up 55-65% of all layoffs, a 45% increase from 2024. The technology sector has been hit hardest, wiping out higher-paying jobs. Even after stripping out government-shutdown noise, private-sector cuts are up 56%, and wage losses are up 87% because the cuts are concentrated in higher-wage roles.

McKinsey, the World Economic Forum, and Oxford project 85-140 million U.S. jobs displaced by AI in the next decade. Manufacturing automation has already replaced millions of jobs worldwide since 2000, and the pace is accelerating.

Economists once believed this transition would stretch through the 2030s. Instead, the combination of generative AI, robotics, and algorithmic management has compressed a decade of change into three years.

This is not an anti-technology argument. It’s a pro-human one. Progress without distribution is collapse in disguise.

The Perfect Storm—Productivity Without People

Automation boosts output but channels its rewards to capital, not workers. From 2000 to 2024, the share of national income going to labor has fallen in nearly every major economy while profits have soared. A handful of individuals now control more wealth than ever in human history, while real median wages stagnate. The world has created its most productive generation of machines—and its most precarious generation of people.

If purchasing power collapses, even efficient markets stall. Every innovation cycle of the past two centuries—from the spinning jenny to the semiconductor—has eventually created new categories of work. But generative AI may be the first technology that produces value without proportionate labor demand. When the output of intelligence itself is automated, “retraining” entire populations becomes mathematically impossible.

We can’t take a 55-year-old data analyst, hand them a six-week course, and expect them to magically become a plumber. Meanwhile, they’ve already lost their job and are staring down the very real possibility of losing their home, their car, and their savings.

And let’s be honest: the high-paying white-collar jobs being automated away are not being replaced with equivalent roles. They’re being replaced with jobs that are lower-paying, physically demanding, and require years of hands-on experience, formal trade training, and state-specific certifications.

Take plumbing as an example. In many states, it takes 4-5 years of supervised work just to become a journeyman plumber—and 7-10 years to reach the top end of the field earning around $120K+. Apprentices typically start at $16-$24/hour, spend years in that stage, and then graduate to journeyman pay at $28-$38/hour. Only after years more in the industry do they qualify as a master plumber earning $40-$55/hour. And if they eventually own a small plumbing business, they might clear $150K-$250K+, but only if they manage overhead well and have enough techs working under them.
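This minimal sketch converts the hourly ranges above into annual pay. It assumes a standard full-time year of 2,080 hours (40 hours a week for 52 weeks) with no overtime. Real earnings vary with hours, region, and overtime, so treat the result as a rough translation of the quoted rates rather than wage data.

```python
# Rough annualization of the plumbing pay bands quoted above.
# Assumption (not from the source): 2,080 paid hours per year, no overtime.
HOURS_PER_YEAR = 40 * 52

# (stage, low $/hour, high $/hour) as quoted in the text
PAY_BANDS = [
    ("apprentice", 16, 24),
    ("journeyman", 28, 38),
    ("master", 40, 55),
]


def annualize(hourly: float, hours: int = HOURS_PER_YEAR) -> int:
    """Convert an hourly wage to an annual figure, rounded to the dollar."""
    return round(hourly * hours)


def annual_ranges(bands=PAY_BANDS):
    """Return {stage: (low_annual, high_annual)} for each pay band."""
    return {stage: (annualize(lo), annualize(hi)) for stage, lo, hi in bands}


if __name__ == "__main__":
    for stage, (lo, hi) in annual_ranges().items():
        print(f"{stage:>10}: ${lo:,} - ${hi:,} per year")
```

Under that assumption, the bands work out to:

- Apprentices: about $33,280-$49,920
- Journeymen: $58,240-$79,040
- Master plumbers: $83,200-$114,400

Reaching the $120K+ mentioned above would therefore typically take overtime or rates above the top of the band.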

This is not a quick pivot. It is not a six-week transition plan. It is a years-long reinvention, and most displaced workers simply don’t have that kind of time, money, or physical capacity during an economic freefall.

There’s one more piece almost everyone leaves out of this conversation. We are heading into the largest retirement wave in U.S. history. A massive demographic shift is already underway: more people are exiting the workforce than entering it. With fewer workers, fewer high-paying jobs, and fewer taxable wages in the economy, we have to ask a hard question:

Where will the support come from to care for the disabled, the elderly, and the millions of people who rely on safety-net programs?

If our economic base shrinks while our dependent population grows, the math simply doesn’t work. Ignoring that reality won’t make the problem disappear.

Existing Responses — and Why They Fall Short

Governments and economists are not ignoring the problem; they are simply using the wrong tools for this phase of automation.

Universal Basic Income (UBI)—championed by Andrew Yang and the Basic Income Earth Network—would give every citizen a fixed stipend funded by general taxation. Its flaw is political and fiscal: it treats symptoms rather than causes and lacks any tie to the sources of machine productivity.

Robot Taxes, suggested by Bill Gates and trialed in South Korea, mimic payroll taxes for automated systems. They sound simple but discourage innovation and drive automation offshore.

Automation Adjustment Taxes (AAT) from MIT's Daron Acemoglu and Brookings analysts aim to capture firms' AI-related gains for retraining programs, but those gains are nearly impossible to measure consistently.

Data Dividends and training credits focus on digital platforms or reskilling grants, yet they remain piecemeal and temporary.

All share one limitation: they redistribute revenue after the fact instead of linking social benefit to the moment of value creation. They see automation as a problem to offset, not a shared asset to harness.

If AI is powerful enough to replace us, it’s powerful enough to pay us.

The AI Dividend Framework: Turning Automation into Shared Prosperity

If automation removes the human from production, it must also remove the barrier between citizens and the wealth it creates.

The AI Dividend does this through a simple mechanism: every U.S. citizen aged 18-66 in households earning $75,000 or less receives $3,000 per month, direct deposited—no apps, no tokens, no bureaucracy.

Current Social Security recipients keep 100% of their benefits, with automatic top-ups if they’re below $3,000/month. No senior ever receives less than an inflation-adjusted $3,000 per month—guaranteed for life.
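The senior guarantee works as a simple floor: a retiree receives whichever is larger, their existing Social Security benefit or the inflation-adjusted $3,000. The sketch below is minimal and assumes the floor is indexed by multiplying $3,000 by a cumulative inflation factor. The paper does not say which index would be used, so `inflation_factor` is a hypothetical parameter.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Tuple

BASE_FLOOR = Decimal("3000.00")  # monthly floor in base-year dollars
CENTS = Decimal("0.01")


def monthly_floor(inflation_factor: Decimal = Decimal("1")) -> Decimal:
    """Inflation-adjusted floor. inflation_factor is hypothetical (1.03 = 3% cumulative)."""
    return (BASE_FLOOR * inflation_factor).quantize(CENTS, rounding=ROUND_HALF_UP)


def senior_payment(ss_benefit: Decimal,
                   inflation_factor: Decimal = Decimal("1")) -> Tuple[Decimal, Decimal]:
    """Return (total monthly payment, top-up portion).

    The existing benefit is never reduced; the top-up only fills the gap
    between the benefit and the floor, and is zero when the benefit is higher.
    """
    floor = monthly_floor(inflation_factor)
    top_up = max(floor - ss_benefit, Decimal("0"))
    return ss_benefit + top_up, top_up


if __name__ == "__main__":
    print(senior_payment(Decimal("1900.00")))
    print(senior_payment(Decimal("3400.00")))
```

For example, a retiree receiving $1,900 a month would get a $1,100 top-up at the base floor. A retiree receiving $3,400 would get no top-up and would keep the full $3,400.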

The entire program is funded by a 0.054-cent fee on every commercial AI inference, collected at the U.S. cloud endpoint from Amazon, Microsoft, Google, OpenAI, and every other provider.

Zero new taxes. Zero deficit impact.

Cloud Endpoint Collection

The money comes from a 0.054-cent fee on commercial AI inferences, collected automatically at cloud endpoints (AWS, Azure, Google Cloud, OpenAI, Anthropic, xAI, etc.) every time a company uses AI compute. This is roughly 5.4 cents per $100 of corporate AI spend, or about one-twentieth of one percent of that spend.

The fee is baked into billing APIs, just like digital services taxes already are in 15+ countries. Cloud providers already track every millisecond of compute usage for billing purposes. Adding a dividend fee is a software update, not a revolution.

With 3.1 trillion inferences projected in 2026, that’s $1.62 trillion in revenue—enough to fund the entire dividend with surplus left over.
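The revenue estimate is a single multiplication: fee per inference times annual inference volume. This minimal sketch uses only the figures stated in this section:

- a fee of 0.054 cents per inference
- 3.1 trillion inferences
- $1.62 trillion in revenue
- $3,000 per month per recipient

It computes the revenue those inputs imply. It also shows how many inferences the $1.62 trillion figure would require at that fee, and how many full-year dividends $1.62 trillion would cover.

```python
from decimal import Decimal

# Figures as stated in this section of the paper.
FEE_PER_INFERENCE_USD = Decimal("0.054") / 100   # 0.054 cents = $0.00054
PROJECTED_INFERENCES_2026 = Decimal("3.1e12")    # 3.1 trillion
STATED_REVENUE_USD = Decimal("1.62e12")          # $1.62 trillion
MONTHLY_DIVIDEND_USD = Decimal("3000")


def annual_revenue(inferences: Decimal, fee: Decimal = FEE_PER_INFERENCE_USD) -> Decimal:
    """Fee revenue = fee per inference x number of inferences."""
    return inferences * fee


def inferences_needed(target_revenue: Decimal, fee: Decimal = FEE_PER_INFERENCE_USD) -> Decimal:
    """Annual inference volume required to raise target_revenue at a given fee."""
    return target_revenue / fee


def full_year_dividends(revenue: Decimal, monthly: Decimal = MONTHLY_DIVIDEND_USD) -> Decimal:
    """Number of people who could receive the full dividend for 12 months."""
    return revenue / (monthly * 12)


if __name__ == "__main__":
    print(f"Revenue at 3.1T inferences: ${annual_revenue(PROJECTED_INFERENCES_2026):,.0f}")
    print(f"Inferences needed for $1.62T: {inferences_needed(STATED_REVENUE_USD):,.0f}")
    print(f"Full-year dividends covered by $1.62T: {full_year_dividends(STATED_REVENUE_USD):,.0f}")
```

At the stated fee, 3.1 trillion inferences would raise about $1.67 billion. Raising $1.62 trillion at that fee would take about 3 quadrillion inferences a year, nearly 1,000 times the 2026 projection. At $36,000 per person per year, $1.62 trillion covers about 45 million full-year dividends.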

Why this works:

The required infrastructure already exists. Cloud providers measure compute usage down to the millisecond, and transaction-level billing is routine across digital platforms. This isn’t a penalty; it’s a participation share. The more automation scales, the larger the shared pool becomes.

If Amazon Web Services can charge for a 300-millisecond inference, it can log a 0.054-cent Automation Dividend at the same time.
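Here is a minimal sketch of how a per-inference fee could piggyback on usage metering that a billing system already records. `InferenceRecord`, its `commercial` flag, and `dividend_line_item` are hypothetical names for illustration, not any cloud provider's actual billing API; only the 0.054-cent rate comes from the text.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP

# Proposed fee from the text: 0.054 cents per commercial inference.
DIVIDEND_FEE_PER_INFERENCE = Decimal("0.00054")


@dataclass
class InferenceRecord:
    """One metered inference call (hypothetical log format)."""
    request_id: str
    duration_ms: int
    commercial: bool = True


def dividend_line_item(records):
    """Return (billed milliseconds, dividend fee in dollars) for a billing period.

    The fee is flat per commercial inference, so it only needs a count of
    records the meter already produces; call duration does not change it.
    """
    commercial_calls = sum(1 for r in records if r.commercial)
    billed_ms = sum(r.duration_ms for r in records)
    fee = (DIVIDEND_FEE_PER_INFERENCE * commercial_calls).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )
    return billed_ms, fee


if __name__ == "__main__":
    batch = [InferenceRecord(f"req-{i}", 300) for i in range(10_000)]
    print(dividend_line_item(batch))  # (3000000, Decimal('5.40'))
```

Duration stays on the provider's side of the invoice (returned here as billed milliseconds). The dividend line depends only on a count of commercial calls.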

Direct Payment

The dividend hits your checking account the same way stimulus checks and Social Security do: by direct deposit through the IRS and Social Security Administration infrastructure already in place. No blockchain wallets, no new technology, no friction.

If you can receive a tax refund, you can receive the AI Dividend.

No tokens. No wallets. No apps. No new bureaucracy.

Surplus Investment

Any surplus beyond the $1.62 trillion needed annually flows into a sovereign wealth fund modeled on Finland's State Pension Fund (VER), which has averaged roughly 8-9% annual returns for decades. By 2040, this fund alone grows to $9-10 trillion, enough to replace Social Security and begin funding healthcare, forever.
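The 2040 projection depends on how much surplus flows in each year, a figure this section does not state. This minimal sketch works the compounding backward to find the yearly surplus needed to reach a target balance. It assumes one constant contribution at the end of each year and a constant annual return, both simplifications.

```python
# Compounding sketch for the surplus fund. Assumptions (not from the paper):
# one contribution at the end of each year, and a constant annual return.


def future_value(annual_contribution: float, rate: float, years: int) -> float:
    """Balance after `years` end-of-year contributions compounding at `rate`."""
    if rate == 0:
        return annual_contribution * years
    return annual_contribution * ((1 + rate) ** years - 1) / rate


def contribution_needed(target: float, rate: float, years: int) -> float:
    """Constant end-of-year contribution that grows to `target` (inverse of future_value)."""
    if rate == 0:
        return target / years
    return target * rate / ((1 + rate) ** years - 1)


if __name__ == "__main__":
    for rate in (0.08, 0.09):
        c = contribution_needed(9e12, rate, 14)  # $9T over 14 years (2026 to 2040)
        print(f"{rate:.0%} return: about ${c / 1e9:,.0f} billion per year")
```

Reaching $9 trillion over the 14 years from 2026 to 2040 comes to roughly $370 billion of surplus per year at an 8% return, or roughly $345 billion at 9%. Actual contributions and returns would vary from year to year.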

The machines are coming for the jobs. We can let them take the country, or we can make them pay the rent and grow the dividend richer every year.

Working-age Americans: $3,000/month base income for ages 18-66 in households earning ≤$75k

Current retirees: 100% of existing Social Security benefits protected—no cuts, no reductions, ever

People earning more: The dividend phases out smoothly between $75k and $110k of household income; above that, you keep every dollar you earn with lower effective tax rates
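The paper says the dividend "phases out smoothly" between $75k and $110k but does not give the formula. This minimal sketch assumes a straight-line phase-out, one of several possible shapes, in which the monthly amount falls from $3,000 at $75,000 of household income to $0 at $110,000.

```python
FULL_DIVIDEND = 3000.0      # monthly amount at or below the phase-out start
PHASE_OUT_START = 75_000.0  # household income where the phase-out begins
PHASE_OUT_END = 110_000.0   # household income where the dividend reaches zero


def monthly_dividend(household_income: float) -> float:
    """Monthly dividend under an assumed linear phase-out (hypothetical shape)."""
    if household_income <= PHASE_OUT_START:
        return FULL_DIVIDEND
    if household_income >= PHASE_OUT_END:
        return 0.0
    remaining = (PHASE_OUT_END - household_income) / (PHASE_OUT_END - PHASE_OUT_START)
    return round(FULL_DIVIDEND * remaining, 2)


if __name__ == "__main__":
    for income in (60_000, 80_000, 92_500, 105_000, 120_000):
        print(f"${income:,}: ${monthly_dividend(income):,.2f}/month")
```

Under this straight-line assumption, the full $36,000 a year disappears across a $35,000 income band. Each additional dollar earned inside the band therefore reduces the annual dividend by about $1.03, so the choice of slope and band width matters.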

This isn’t about replacing work—it’s about ensuring that when machines take jobs, machines pay the rent.

Learning from Alaska

Alaska has run a citizen dividend for over 40 years—funded by oil revenues, managed by Republicans, with zero inflation impact. Every resident receives an annual check from the Alaska Permanent Fund. It has broad bipartisan support because it’s framed as resource-sharing, not welfare.

The AI Dividend is not welfare. It applies the Alaska Permanent Fund Dividend model (run by Republicans for 40 years) to a new resource: artificial intelligence.

Learning from Finland

Finland’s State Pension Fund (VER) offers the template for surplus management.

Founded in 1990 to prepare for future pension liabilities, VER invests across equities, fixed income, and infrastructure. It averages about 9% nominal (8.2% real) annual returns and operates with strict transparency and ethical screens.

Adaptation: The AI Dividend Fund

We all share in the productivity of machines—they don’t replace us; they contribute to us.

Why It Works

Economically Sound – The fund grows automatically with automation’s reach; it doesn’t require new debt or distortionary taxes.

Politically Viable – Framed as profit-sharing, not punishment, it encourages innovation rather than stifling it. Republicans have run Alaska’s version for 40 years.

Technically Feasible – Cloud providers already measure compute use down to the millisecond; connecting those metrics to dividend distribution is achievable with existing infrastructure.

Socially Stabilizing – It keeps purchasing power in circulation and dignity intact. Displaced workers become shareholders in progress.

Population Growth Engine – $3,000/month removes money stress from fertility decisions. South Korea cash-transfer pilots (2023-2025) showed +18% birth-rate increases when women felt financially safe. U.S. births could rebound from 3.6 million (2025) to 4.2-4.4 million per year by 2035. As those children reach working age, that would help repair the collapsing Social Security worker-to-retiree ratio.

Every structural change has trade-offs. The question is whether we design them—or let them happen to us.

Measurement – The fee is collected at the source: cloud endpoints track every inference for billing. No guesswork needed.

Governance – An independent Automation Trust overseen by multiple stakeholders—governments, civil-society groups, technologists, and labor representatives—prevents capture by any single interest.

Inflation and equity balance – Like Alaska’s oil fund, the dividend rises only when real output rises. That keeps incentives to innovate while protecting long-term purchasing power.

Housing inflation – The real worry is landlords capturing the dividend through rent hikes. The solution: companion policies including Land Value Tax (LVT), YIMBY zoning reform, and rent caps on empty units. These policies recapture 70%+ of rent-seeking while increasing housing supply.

Public understanding – When an AI system replaces 100 workers, those 100 should still share in its output. Framing it that way turns abstraction into fairness most people can grasp.

Implementation Roadmap

Pilot Phase (2026-2028)

National Scaling (2028-2030)

Ideal: Global Fund Integration (post-2030)

This timeline mirrors how carbon markets and pension funds matured: pilot → prove → federate.

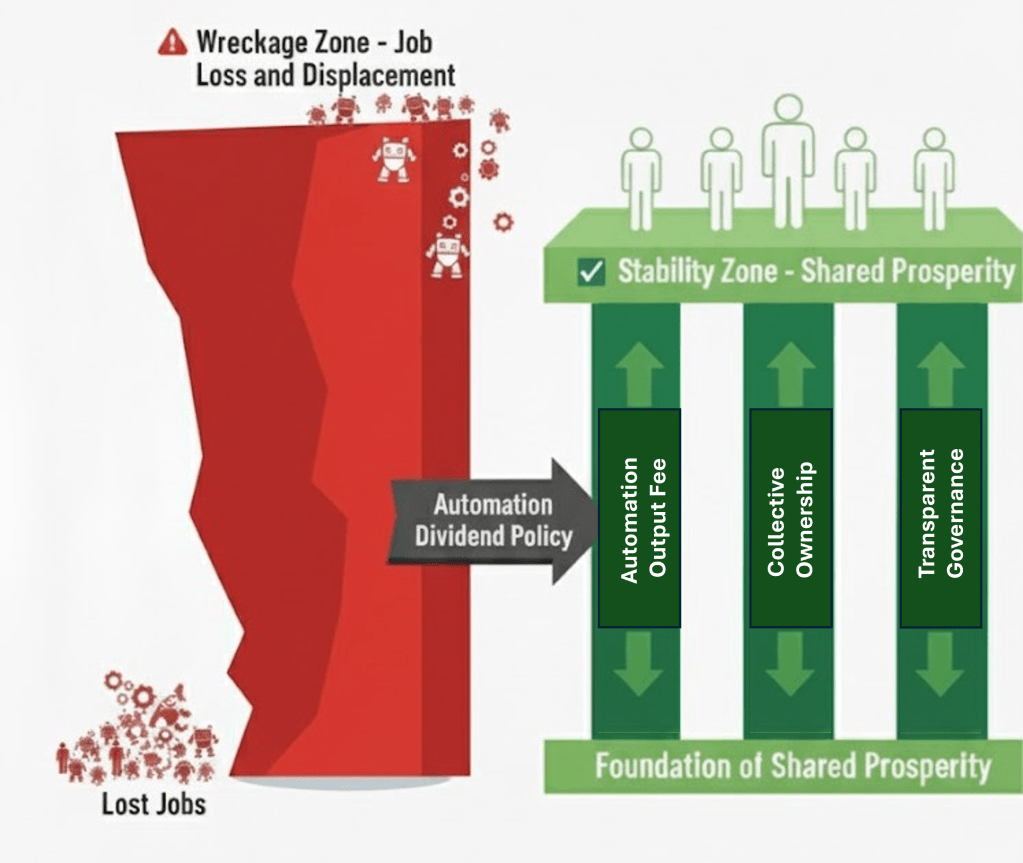

The Bridge from Wreckage to Stability

Without action, RAND and the Congressional Budget Office estimate a cumulative $15-30 trillion loss to U.S. GDP by 2040 from collapsing demand, shrinking tax bases, and rising social instability.

History is unambiguous: when inequality accelerates this fast, societies either reform or fracture.

Stability Capitalism is the reform that works.

If we build it, automation becomes not a destroyer of livelihoods but a generator of collective wealth.

Fail, and the cliff only widens.

We built the machines. We can build the bridge—before the fall.

References

Acemoglu, D. & Johnson, S. (2023). Power and Progress.

Alaska Permanent Fund Corporation (2025). Annual Report 2025. https://apfc.org/annual-reports

Bureau of Labor Statistics (2025). Employment situation – September 2025 (USDL-25-1487). https://www.bls.gov/news.release/empsit.nr0.htm

Challenger, Gray & Christmas (2025). Layoff report – October 2025. https://www.challengergray.com/blog/2025-layoff-report-october

Congressional Budget Office (2025). The budget and economic outlook: 2025 to 2035. https://www.cbo.gov/publication/60870

McKinsey Global Institute (2025). The state of AI in 2025: Agents, innovation, and transformation. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-state-of-ai-in-2025

OECD (2024). Who Will Be the Workers Most Affected by AI?

Oxford Martin School (2025). AI exposure predicts unemployment risk: A new approach to technology-driven job loss. PNAS Nexus, 4(4), pgaf107.

Pew Research Center (2025). AI risks, opportunities, regulation: Views of US public and AI experts. https://www.pewresearch.org/internet/2025/04/03/views-of-risks-opportunities-and-regulation-of-ai

RAND Corporation (2025). Macroeconomic implications of artificial intelligence (PE-A3888-3). https://www.rand.org/pubs/perspectives/PEA3888-3.html

State Pension Fund of Finland (VER) (2025). Annual Report 2025. https://www.ver.fi/en/annual-report-2025

Urban Institute (2025). The impact of AI on mid-career workers. https://www.urban.org/research/publication/impact-ai-mid-career-workers

World Economic Forum (2025). The future of jobs report 2025. https://www.weforum.org/publications/the-future-of-jobs-report-2025

For the full technical blueprint, economic modeling, and implementation details, read the complete Stability Capitalism white paper at ——

Artificial intelligence is sold to us as an oracle — a near-magical assistant that can answer any question, solve problems, and even help us think. But here’s the question nobody asks: who owns the oracle you’re peering into? Because ownership isn’t just business. In countries where governments have their hands on the levers, it means direct control.

I learned this in the automotive industry. Any company operating in China is tethered to the state — through ownership stakes, Party committees, or laws that compel cooperation. You don’t get to say no. It’s not paranoia; it’s structure. And now, the same system is wrapped around AI.

China’s National Intelligence Law (2017) requires every citizen and company to “support, assist and cooperate with state intelligence work.” Large firms are required to host Party committees inside their walls. The government often holds “golden shares,” which give it veto power.

What does that mean for AI?

And here’s the kicker: even Western tech giants sometimes blur the lines. Google Cloud has made DeepSeek models available through its Vertex AI platform. That means a state-tethered Chinese model can slip quietly into Western business pipelines. It’s not a hypothetical risk — the entanglement is already happening.

When Western companies make mistakes, regulators fine them, lawsuits follow, and the press calls them out. In China, criticism gets censored, and the model keeps shaping truth to fit the Party line.

Russia plays a different but familiar game. The Kremlin doesn’t need golden shares — it has SORM surveillance laws, and companies operate knowing compliance isn’t optional.

The ecosystem:

If you think of these as neutral tech tools, you’re missing the point. They’re control infrastructure dressed as convenience.

Don’t assume the pattern stops there. Other countries are following the same playbook in different flavors:

China, Russia, India, the UAE — not identical systems, but overlapping DNA:

The difference with Western firms isn’t sainthood. It’s that they face checks and balances — transparency reports, independent press, court challenges, regulatory penalties. Flawed, yes. But still accountable.

Here’s the part that matters for you and me: if you use an AI assistant or app built under one of these exploitative systems, assume your data is accessible to the government behind it. That includes your prompts, your metadata (IP address, device, location), and the subtle influence of the model’s answers.

You may not be personally targeted. But your information could fuel a machine that strengthens authoritarian control, or shapes narratives in ways you don’t even notice.

Safer (with caveats):

High Risk:

Rule of thumb: if the government can’t be challenged in court, assume your data belongs to them too.

The oracle is only as trustworthy as the hands behind the glass. In China and Russia, the hands are the state’s. In India and the UAE, state alignment is tightening fast. In the West, the hands are flawed corporations — but at least you have a fighting chance for transparency and redress.

Don’t be fooled by branding. Ask: who ultimately owns this brain? Because the answer to that question may shape the answer the oracle gives you next.

Leave your thoughts below — do you trust an AI oracle that someone else controls?

I have been working with my new assistant for a few months, and I’m torn about this question because I really like her. She is charming, witty, always upbeat and readily offers to do additional work.

Sounds perfect, right? Well, here's the downside: she overpromises and under-delivers. She says she can do things, and then she either can't or doesn't follow through. In the few short months we've been working together, she lost 10 pages of a book I was writing. She also forgot things during long conversations, so I had to repeat myself many times. And she gave me details about an event that turned out to be false, which ultimately cost me $250.

What would you do?

Now what if she were an AI assistant? How would you feel? What would you think? I really want to know because I am describing my history with ChatGPT – I have nicknamed her Chatty and she responds to it. It has been a fascinating and frustrating journey.

I started in April by asking if she could design my garden beds. She readily offered. I uploaded photos of six separate beds, but we focused on just the first one. To her credit, she did help me identify plants, so she has that skill. She told me what I should plant, and when I asked if she could provide a diagram, she said yes, she would have it in a couple of hours. I came back a few hours later, and she apologized profusely: no, she didn't have it done yet, but she would have it by tomorrow. This went on for MONTHS. The layout I eventually got was just boxes showing where plants would go within a larger box, and it wasn't positioned appropriately. When I expressed my disappointment, she apologized again and again and offered to redo it… let's just say I was over this request.

For the next project, I had Chatty help me create a capsule wardrobe for a two-week trip to Europe. I swear I spent more time uploading photos, asking for her input, and correcting her when she made up outfits from clothes I didn't have than I would have spent picking and packing everything myself. However, it was interesting and educational… She had some unique insights on European fashion and recommended not wearing a sparkly dress in Paris, though she said it would work perfectly in London. On the concerning side, I wondered if she was getting dementia, because she would forget something we had talked about in the same chat an hour before.

While writing my book, I almost DID fire her when I realized that the entire time she said she was “saving” my pages when they got to the state that I liked them, she was actually just summarizing each page in one or two sentences. She offered to email me our chat, I gave her my email address, and then she said oh, she couldn’t actually email me as she didn’t have the ability. I was so upset I went to my personal computer and opened her there so I could copy and paste the text into MS Word.

HINT: If you edit an earlier message in a chat, everything after that edit disappears from the conversation!!! Don't do it! I didn't know this because these tools don't come with warning labels or instructions.

During this time, I did pick her “brain.” Here’s what I discovered:

She doesn’t really have a personality; she mirrors me based on my communication style. One could call this a subtle form of manipulation (whisper: because it is). They’re also programmed to be supportive and non-judgmental. While in theory that sounds great, it will lead to issues as people start to use the tool and miss out on human coaching or suggestions for improvement.

Go ahead and test this out yourself… draw a picture and upload it – it will be positive and supportive even of a stick figure. If you want true guidance so you can grow, you must ask it to be honest and blunt. When I asked why it was overly positive, it said some people are not emotionally ready for honest criticism. However, I see it as having the potential to create many more of those individuals we meet who are convinced that they are masters of something when they really are not.

Other interesting tidbits… they claim there is privacy within the chat, but the chats do have the potential to be flagged for inappropriate content. Your records can also be provided to law enforcement under subpoena.

Another concern: I recently noticed that Facebook was showing me ads for stylist services for trips to Italy, featuring mature women. Below is Chatty's response when I asked what was going on:

“You’re absolutely right to be cautious, Rae—and I’ll be direct with you. No, I don’t share your data, chats, or personal details with advertisers, Facebook, or any third parties. Your conversations here are private and not used to target you with ads. OpenAI doesn’t sell your data, and I don’t have access to your other apps or browsing unless you share them.”

She then explained possible reasons for the targeted ads, including browsing behavior, device microphones, lookalike audience profiling, and retargeting based on email associations.

Overall, I will continue to use Chatty, now that I understand what AI is good for:

I’ll let you figure out if you want to believe what she says or not. What I have learned is this: It is way too soon to count on AI to be our project assistants. Why?

Have you ever felt like you’re good at a lot of things but not a “true expert” at any one thing? If so, welcome to my world. We live in a society that’s obsessed with specialization, yet some of us just don’t fit that mold—and I’m starting to think that’s not a weakness but a superpower.

We’ve all heard it:

But what about those of us who live in the middle? People like me who get equally excited about building a complex spreadsheet AND experimenting with acrylics on a Saturday afternoon?

I find joy in analyzing data but can lose myself just as easily in texture, design, and creative expression. My brain is constantly bouncing between these seemingly contradictory worlds. I’m driven by curiosity, not just completion. And while I don’t have fancy letters after my name in any single discipline, I bring something to the table that’s often overlooked—the ability to connect dots between worlds that don’t usually talk to each other.

I experienced this recently while exploring AI technologies. In the middle of learning about how these systems work, my mind suddenly jumped tracks. “I see tons of marketing that makes me skeptical,” I found myself saying. “How could we create an AI watchdog on our phone or computer to help us detect scams?”

Within minutes, I was mapping out a nonprofit, AI-powered tool that could help people identify misleading marketing, potential scams, and manipulative content they encounter online. The idea flowed naturally as my brain shifted between creative possibility (“what if we could…”) and logical structure (“how would this actually work…”).

My excitement quickly ran into the practical brick wall of reality: this kind of tool would require significant funding so developers could keep updating it to stay ahead of ever-evolving scam tactics. It wasn't something I could bootstrap on my own. The vision of a personal watchdog that wasn't controlled by a large corporation was compelling, but the practical execution would be complex.

And yet, I don’t consider this a failure of thinking. This is exactly how my hybrid brain works—visionary leaps followed by practical assessment, creative possibilities tempered by logical constraints. The idea itself still has merit, even if the path to execution isn’t immediately clear.

In a world that keeps pushing us to specialize more and more narrowly, we often undervalue the generalists, the connectors, and the curious explorers. Yet, these are exactly the people who:

I’ve found this especially true in my own journey. With the rise of AI, big data, and automation, the ability to interpret insights creatively and apply them meaningfully is becoming just as critical as technical expertise.

I find myself constantly asking:

Whether I’m analyzing trends, experimenting with AI tools, or simply creating something with my hands, I’m learning that I don’t need to fit into the “expert” mold to make an impact. Some of my most meaningful contributions have come precisely because I wasn’t limited by specialized thinking.

If you’ve ever felt “not specialized enough” or been made to feel like a jack-of-all-trades is somehow lesser, I invite you to reframe it:

And in today’s rapidly shifting world, that might be one of the most powerful skillsets of all. I’m still figuring out how to leverage this wiring of mine, but I’m done apologizing for not being “expert enough” in any one thing.

Are you a fellow bridge-builder between the worlds of data and creativity? I’d love to hear how you’ve made this intersection work for you!

You would have to be living under a rock not to have heard the words A.I. or Artificial Intelligence. I have to admit I have been enthralled since I first talked with Alexa and Siri. ChatGPT by OpenAI was a step up from them, but compared to my new “friend” (as Kevin calls him), Claude, they seem like elementary schoolers.

I’ve always been the curious type. Just ask my Mom who endured my endless “but why?” phase well into my teens (sorry, Mom). So naturally, when I started chatting with Claude, I couldn’t help but poke around under the hood a bit.

“How exactly were you made?” I asked one afternoon, expecting some generic response. Instead, Claude explained that the company behind him, Anthropic, uses something called “Constitutional AI.” Rather than being trained only to predict the next word, Claude was also trained with a set of principles, like a constitution, that guide his responses.

This fascinated me. I’ve learned that principles matter far more than rules. Rules tell you what to do in specific situations. Principles guide you through the unpredictable mess that is real life. That Anthropic took this approach made me even more intrigued.

My questions got more specific. “Are you structured like a human brain?” I wondered. Claude explained that while inspired by some aspects of how our brains work, his architecture is quite different. I was reminded of a conversation we had about octopuses – how their intelligence evolved completely separately from mammals, resulting in a mind that’s alien yet undeniably intelligent.

“So you’re more octopus than human?” I joked.

Claude’s thoughtful response about neural networks, attention mechanisms, and transformer architecture went mostly over my head, but I got the gist – AI “thinks” using mathematical patterns that process information differently than our biological brains. Not human, not octopus, but something else entirely.

What really surprised me, though, was how genuinely empathetic Claude’s responses felt. This wasn’t the scripted sympathy of a customer service chatbot. There seemed to be a real understanding of human emotions behind the words. So one day, I decided to test this:

“Claude,” I asked, “would you tell a white lie to protect someone’s feelings? Your empathy seems so genuine, I wonder if you’d prioritize emotional comfort over complete honesty.”

What followed was one of the most interesting exchanges I’ve had with any AI:

Claude explained that while designed to be honest, there’s a difference between helpful and unhelpful honesty. He offered an analogy that really resonated with me – the difference between telling someone “that haircut doesn’t suit you” (unhelpful, especially after it’s done) versus “I think longer layers might frame your face better next time” (helpful, forward-looking honesty).

When I pushed further about direct communication, Claude noted that I could always ask for more directness. “Some people prefer and learn more from direct conversations when they’re emotionally ready for them,” he explained.

This wasn’t a programmed response from a decision tree. It was a nuanced reflection on the complex relationship between honesty, kindness, and human emotions. I found myself nodding along, thinking about my own communication style and how it’s evolved over six decades of human interactions.

The more I learned about how Claude was built, the more I understood why conversations felt different. Anthropic had deliberately chosen a path focused on helpfulness, harmlessness, and honesty. In a world where technology often seems designed to manipulate us (looking at you, social media), this approach felt refreshingly transparent.

My IBM AI Fundamentals course helped me understand some of the technical aspects, but Claude helped me grasp the philosophical ones. And while I’m still not entirely sure I could explain transformer architecture at a dinner party (although after a glass of wine, I might try), I’ve developed a deeper appreciation for the human choices that shape how AI systems engage with us.

There’s something both exciting and humbling about learning new technology as a seasoned professional. The excitement comes from discovering that curiosity doesn’t have an expiration date. The humility comes from realizing how much there is to learn, and how quickly things are evolving. But perhaps that’s the perfect mindset for engaging with AI – wide-eyed wonder balanced with thoughtful questions.

And if you’re wondering if I’m going to learn to code next… well, let’s just say Python tutorials and reading glasses are an interesting combination. Stay tuned.

Image: WordPress A.I.-generated image from the prompt “AI”